Addressing Challenges of Explainable AI in Healthcare

In healthcare, the excitement around artificial intelligence (AI) is real. But let's face it: making the most of AI in medicine is easier said than done.

The key challenges with implementing AI in medicine include:

- The need for large, comprehensive datasets to train deep learning (DL) models effectively

- The high storage and computational demands of DL approaches

- Difficulties in deploying DL solutions in healthcare because of data quality and availability issues

- The lack of transparency in DL models, often described as "black box" systems, which makes their decision-making processes hard to understand

- Concerns about the fairness and trustworthiness of AI applications in sensitive sectors such as medicine

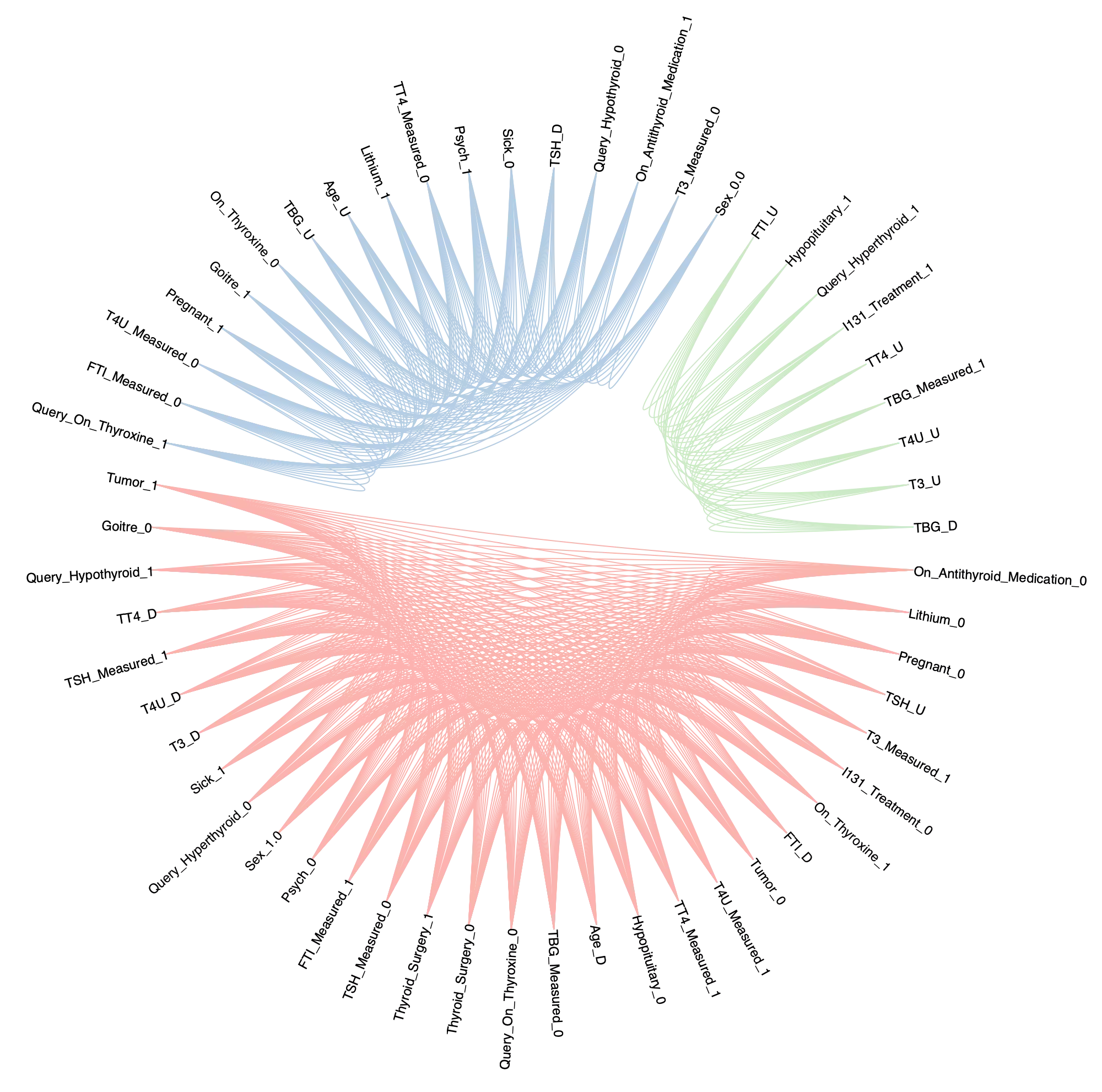

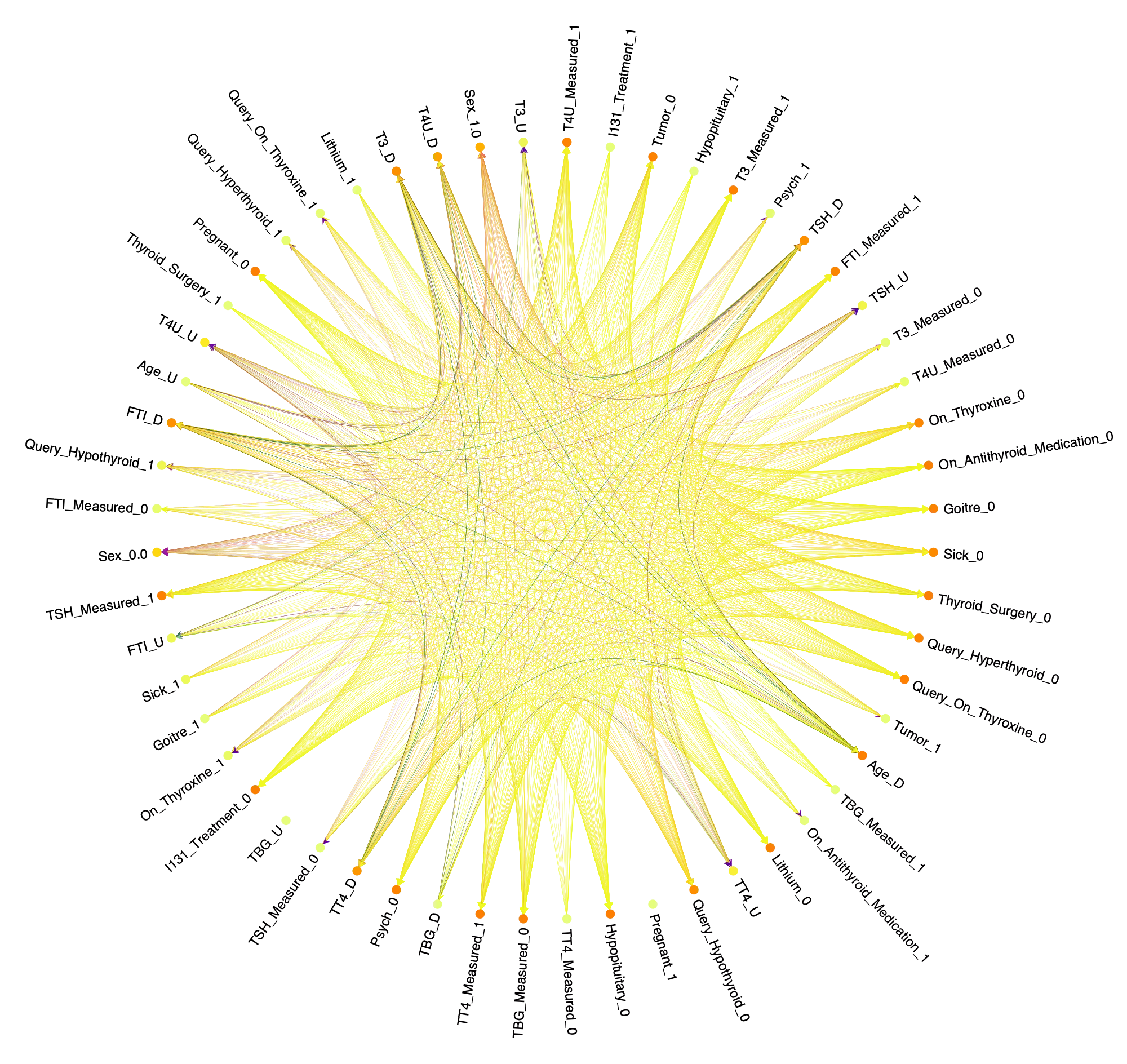

In response, Sano's Personal Health Data Science team, led by Dr. Jose Sousa, has embarked on an ambitious project to address these issues. The team is developing the Comprehensive Abstraction and Classification Tool for Uncovering Structures (CACTUS), a tool designed to make AI in healthcare both more applicable and easier to understand.

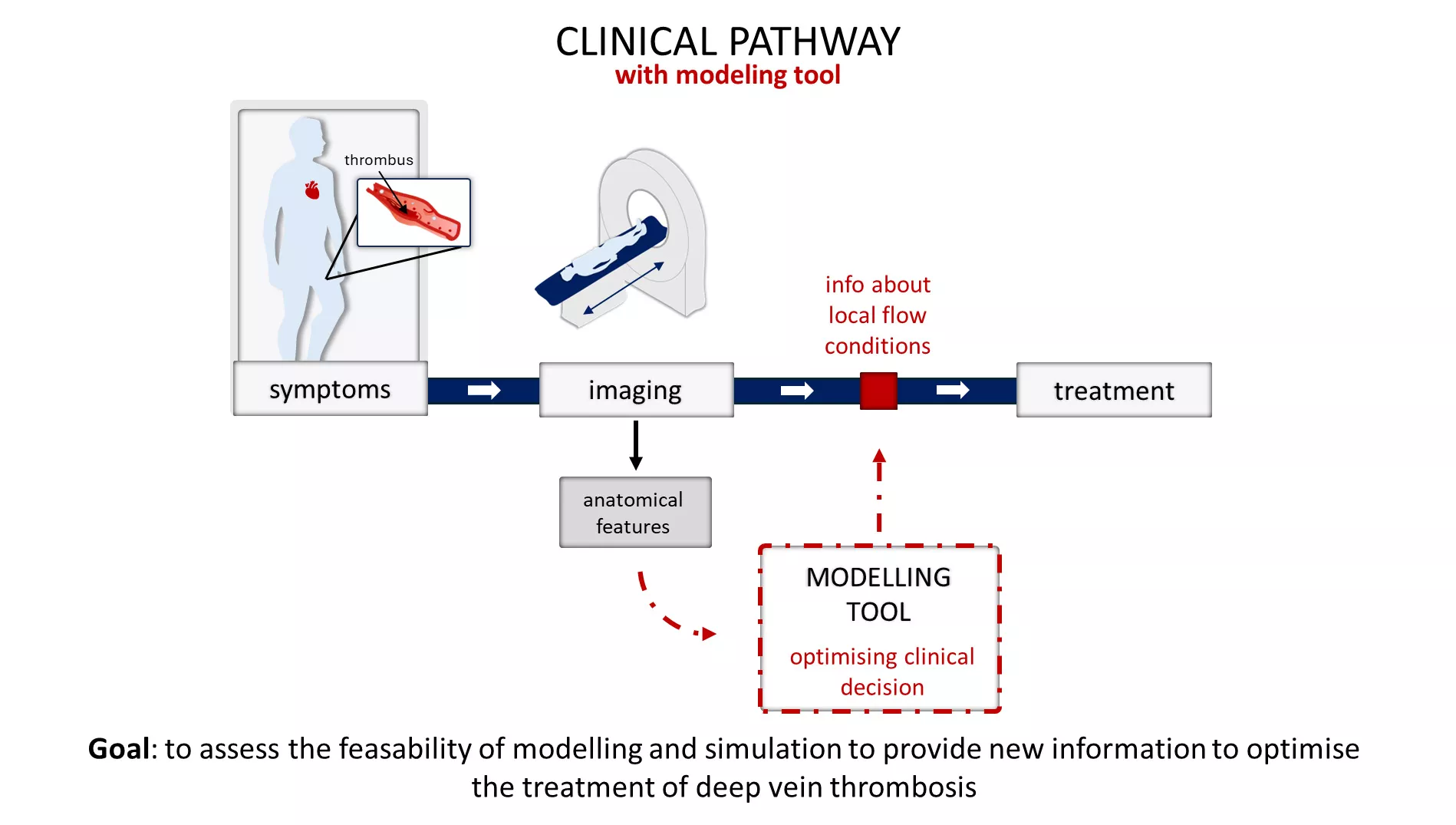

CACTUS proposes a new classification methodology for explainable AI that is scalable, transparent, and adaptable. It simplifies complex data into more manageable representations, making that data easier to analyze and interpret.

This work on explainable AI techniques serves a broader vision: creating a personal health representation that supports individual decision-making by offering perspectives on future wellbeing.

We invite you to read and discuss the paper "CACTUS: a Comprehensive Abstraction and Classification Tool for Uncovering Structures", which presents the technical details of the research. Authors: Luca Gherardini, Varun Ravi Varma, Karol Capała, Roger Woods, Jose Sousa.

The paper was recently published in ACM Transactions on Intelligent Systems and Technology (TIST), a journal of the Association for Computing Machinery (ACM).
