Exploring Bias and Responsibility in Medical AI

Sano at Poland Healthcare Datathon 2025

At the end of September, Paulina Tworek and Justyna Andrys‑Olek from Sano’s Computational Intelligence Team represented our centre at the Poland Healthcare Datathon in Gdańsk, an event that brings together professionals in data science, medicine, and artificial intelligence to advance data‑driven healthcare innovation.

Insightful Talks and Key Discussions

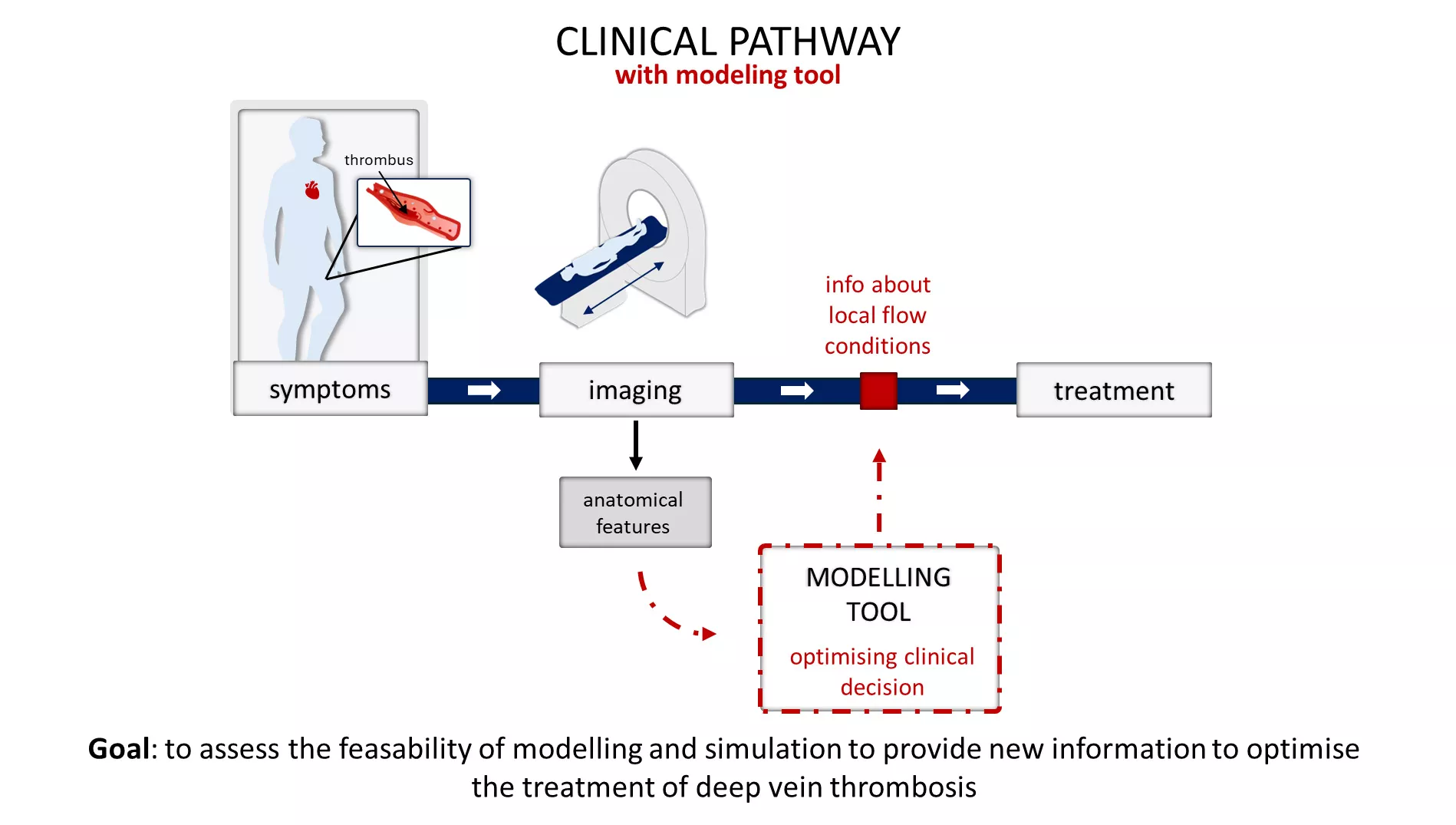

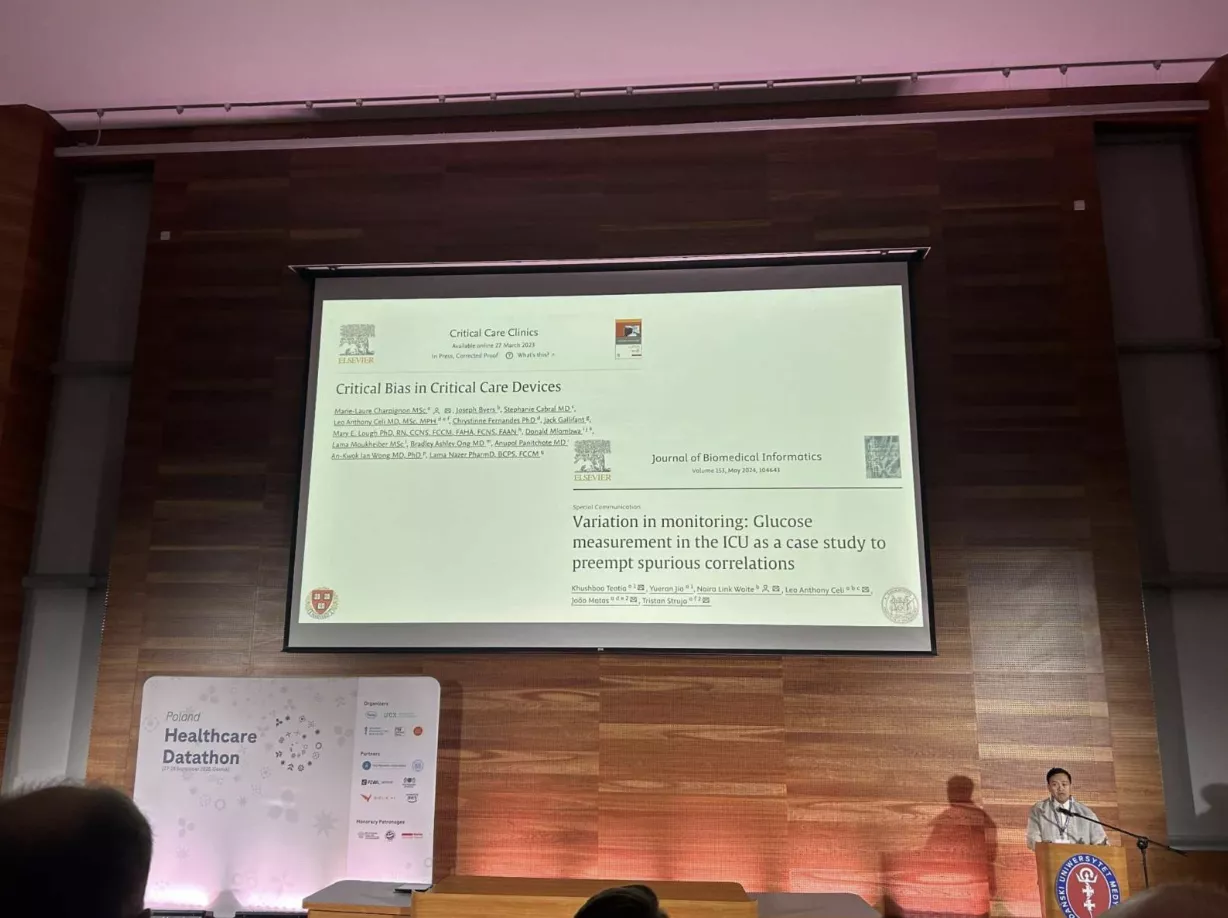

The programme featured thought‑provoking sessions, including an opening lecture by Dr. Leo Anthony Celi from the MIT Laboratory for Computational Physiology. His talk addressed inequality and bias in AI models, offering practical strategies to minimise their impact in increasingly automated clinical environments.

Many presentations throughout the event centred on responsible and transparent AI, which is seen as one of the pillars shaping the future of medicine and pharmacy. Participants explored key questions: How can we ensure fairness in AI systems? How do we balance innovation with patient safety and ethical responsibility?

Reflections from the Sano Team

Reflecting on the conference, Justyna Andrys‑Olek shared:

“One of the greatest challenges in the ongoing digital transformation of healthcare — both in medicine and pharmacy — is the bias in the data used to train AI models. This bias often stems from underrepresented patient groups and can be difficult to detect. Addressing it requires effort, awareness, and diversity in datasets to ensure that models serve a broad population, not just a few.”

Her insights highlight the importance of comprehensive datasets that reflect diversity in gender, age, ethnicity, and socioeconomic background, which is essential for building reliable and equitable AI tools.

Reinforcing Sano’s Commitment to Ethical AI

Participating in the Poland Healthcare Datathon strengthened Sano’s dedication to developing ethical, trustworthy, and inclusive AI in medicine.